Updated 14/03/20

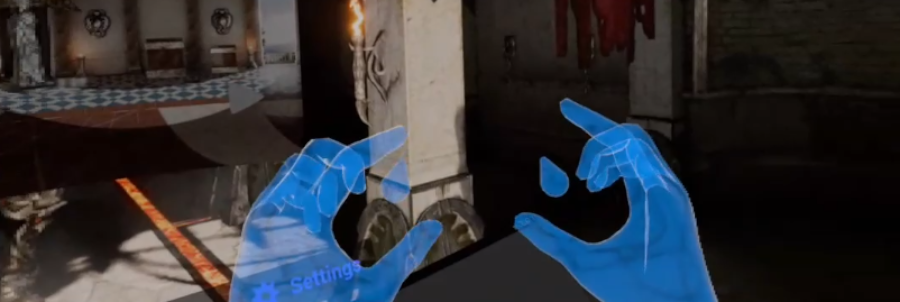

Oculus Quest Hand Tracking - Developer Insights

After implementing hand tracking in my Oculus Quest game, Jigsaw 360, I wanted to share what I learned and give my honest impression of the technology. There are plenty of design considerations to be aware of, but the potential is there to create some awesome experiences.

Try the free demo of Jigsaw 360 to see what you think of my implementation.

The following video covers some of the content in this article, but I will keep adding to this page over time.

Technical review

Hand tracking uses the tracking cameras on the Oculus Quest to determine the position of your fingers inside VR. This works surprisingly well in my opinion, although the lighting and your environment may be a factor in your own experience.

Latency is very low, giving you the feeling that the in-game hand is your own. Seeing your fingers move as expected certainly adds to immersion.

The area in which your hands are detected is quite broad. While finger positions may be less accurate as you move your hands away from the optimum center of view, the range is still impressive.

An important feature is that you can swap between hand tracking and controller tracking while in a game. Simply put the controllers down to start hand tracking, then pick them up and press a button to switch back. I value this feature because it gives players a quick fallback if their current environment makes hand tracking unreliable.

Early standards

While this tech screams out for innovative new applications, it's important for some standards to emerge, and Oculus has made a good start.

Pinch select

Pinching the index finger and thumb together selects items in Oculus menus, so it's advisable to carry that convention into our own experiences, certainly for interacting with user interfaces. I also chose to use it as the grab mechanism for picking up jigsaw pieces.

When you're dealing with user interfaces at eye level, the positions of your finger and thumb can be tracked easily, so the pinch is an ideal select mechanism. However, when you start to rotate your hand, it's harder for those digits to be detected reliably because they can obscure each other, so Oculus has to make some assumptions. In my game, this resulted in puzzle pieces regularly being dropped and then picked up again. I worked around this to some degree by testing whether the finger and thumb were obscuring each other from the player's eyeline. While they are obscured, even a weak pinch is still considered to be in the pinched state. This helped a little, but I also recommend that players close their three lower fingers, as this also improves the accuracy of pinch detection.

It seems that Oculus use the position of the lower three fingers to determine the position of the index finger when it is obscured by the thumb. Hopefully, this is something that will improve over time (it's still early days).
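The obscured-pinch workaround can be expressed as a pinch state with hysteresis: a strong pinch starts a grab, and the release threshold drops further while the digits hide each other. The Python sketch below assumes you can read a pinch strength between 0 and 1, the eye position, and a thumb and index point (tips or distal joints) each frame. It treats the digits as obscured when the thumb-to-index direction lines up with the view ray, meaning one digit sits roughly behind the other. That geometric test, the thresholds, and the function names are hypothetical, not the Oculus API and not necessarily the check used in Jigsaw 360.

```python
import math

# Hypothetical thresholds; tune them by playtesting.
PINCH_ON = 0.9              # strength needed to start a pinch
PINCH_OFF = 0.5             # release threshold when both digits are clearly visible
PINCH_OFF_OBSCURED = 0.2    # much weaker pinch still counts while digits overlap
OCCLUSION_ANGLE_DEG = 30.0  # how closely the digits must line up with the view ray


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _angle_deg(u, v):
    """Angle between two 3D vectors in degrees (0 for a zero-length vector)."""
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    if nu == 0.0 or nv == 0.0:
        return 0.0  # coincident points: treat as overlapping, which keeps the grab
    cos_a = sum(x * y for x, y in zip(u, v)) / (nu * nv)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))


def digits_overlap(eye, thumb_pt, index_pt):
    """True if one digit sits roughly behind the other along the line of sight."""
    angle = _angle_deg(_sub(thumb_pt, eye), _sub(index_pt, thumb_pt))
    # Either digit may be in front, so fold angles near 180 onto 0.
    return min(angle, 180.0 - angle) < OCCLUSION_ANGLE_DEG


def update_pinch(was_pinched, strength, eye, thumb_pt, index_pt):
    """Return the pinched state for this frame, with occlusion-aware hysteresis."""
    if not was_pinched:
        return strength >= PINCH_ON
    obscured = digits_overlap(eye, thumb_pt, index_pt)
    return strength >= (PINCH_OFF_OBSCURED if obscured else PINCH_OFF)
```

Calling `update_pinch` once per frame with the previous result keeps a grab alive through the strength dips that occur while the digits are hidden, while still requiring a firm pinch to start one.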

Palm to face with pinch

Turning your palm to face you and pinching calls up the Oculus dashboard. In my game, when the user looks directly at the palm of their hand, the hand turns grey (as it does in the Oculus dashboard) to show that it is in the right position to summon the dashboard. I suggest other developers consider this approach; otherwise, accessing the dashboard feels a little hit-or-miss and can happen accidentally.
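A possible form of the palm-facing check, written in Python as two cone tests: the palm must point at the eye, and the player must be looking at the palm. It assumes the palm position, a normal pointing out of the palm, the eye position, and the gaze direction are available each frame; the 30-degree tolerance and the function names are hypothetical. When it returns true, the hand could be tinted grey as a cue that the dashboard gesture is available.

```python
import math

FACING_ANGLE_DEG = 30.0  # hypothetical tolerance for both checks


def _unit(v):
    """Return v scaled to length 1, or None for a zero vector."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        return None
    return tuple(c / length for c in v)


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def palm_faces_viewer(palm_pos, palm_normal, eye_pos, gaze_dir,
                      max_angle_deg=FACING_ANGLE_DEG):
    """True when the palm points at the eye AND the player is looking at the palm."""
    to_eye = _unit(tuple(e - p for e, p in zip(eye_pos, palm_pos)))
    normal = _unit(palm_normal)
    gaze = _unit(gaze_dir)
    if to_eye is None or normal is None or gaze is None:
        return False  # degenerate input: treat as not facing
    cos_limit = math.cos(math.radians(max_angle_deg))
    to_palm = tuple(-c for c in to_eye)
    palm_toward_eye = _dot(normal, to_eye) >= cos_limit
    eye_toward_palm = _dot(gaze, to_palm) >= cos_limit
    return palm_toward_eye and eye_toward_palm
```

Requiring both conditions is what separates a deliberate "look at your palm" from a palm that merely happens to face the headset during normal play.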

Detached interactions

Tracked hands tend to jitter. If you attach objects directly to the hands, you may amplify that effect and create an unpleasant experience. Fortunately, Oculus have also thought of this. A floating bulb follows the hand with smoothed motion, which provides a more stable point of reference and is why driving user interfaces is quite reliable. The bulb also visualises your pinch strength, which is a nice feature.

I was already a fan of detached interactions because of the many other benefits they bring (a topic for another blog post). My jigsaw pieces follow the bulb in a detached way too, which smooths their motion further. A beam extends from the bulb to the object you are interacting with. This allows the pieces to change orientation automatically and vary their distance from the hand as they snap into their required position in the puzzle. It feels more like using force powers to assemble the jigsaw than holding pieces in your fingers.
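The exact filter behind the Oculus bulb isn't described here, so the following Python is a stand-in rather than Oculus's implementation: frame-rate-independent exponential smoothing, where a follower closes half of the remaining gap to its target every `half_life` seconds. Chaining two followers (bulb follows hand, piece follows bulb) gives the layered smoothing of a detached interaction. The function name, the default half-lives, and the sample positions are assumptions.

```python
import math


def smooth_follow(current, target, dt, half_life=0.05):
    """Move `current` toward `target`, closing half the gap every `half_life` seconds.

    Hypothetical helper: the 50 ms default is an assumed starting point.
    """
    # Fraction of the remaining gap to close this frame; independent of frame rate.
    t = 1.0 - math.pow(0.5, dt / half_life)
    return tuple(c + (g - c) * t for c, g in zip(current, target))


# Example: a bulb follows the raw (jittery) hand, and a detached piece follows the bulb.
dt = 1.0 / 72.0  # Quest frame time at 72 Hz
hand_samples = [(0.0, 1.2, 0.4), (0.003, 1.2, 0.4), (-0.002, 1.2, 0.4), (0.001, 1.2, 0.4)]
bulb = piece = hand_samples[0]
for hand in hand_samples:
    bulb = smooth_follow(bulb, hand, dt)
    piece = smooth_follow(piece, bulb, dt, half_life=0.1)
```

A shorter half-life tracks the hand more tightly but passes through more jitter; a longer one is steadier but lags behind fast movements, which matters less for a piece that is meant to drift into place.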

I use my beam for all interactions to ensure consistency:

1) Interact with distant user interfaces

2) Remote-grab jigsaw pieces from the tray

3) Pick up items that are close to your hand

I'm not suggesting this should be a standard approach, but it's worth being aware of.

Hand tracking vs controller tracking

As enthusiasts, it's easy for us to get excited about new tech and to forgive its limitations and unreliability. However, general users expect proven, robust solutions.

Controller tracking is very reliable, and button presses are detected with certainty. The experience is solid and frustration-free. Unfortunately, the same can't be said for hand tracking, which has a number of potential frustration points:

* One hand obscuring the other causes glitches

* Hands may glitch as they go out of range

* Pinch and release cannot be as certain as a button press

So, does that mean hand tracking won't ever make it out of beta? I certainly hope not.

It's already in a good state for navigating user interfaces, browsing media and social experiences.

Using the tech in games is more challenging, but that's OK because VR developers love challenges :)

Design considerations

We need to be able to instantly tell glitches apart from intended movement, so that we can temporarily disable physics and other interactions while tracking is unreliable.
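A minimal glitch gate in Python, assuming hand positions in metres and a per-frame delta time: a frame counts as a glitch when the hand appears to move faster than a plausible speed, or when an optional confidence flag (if your integration exposes one) is false. Interactions stay disabled until several clean frames in a row have passed. The speed limit, frame count, and class name are hypothetical starting points, not values from the Oculus API.

```python
import math

MAX_HAND_SPEED = 4.0  # m/s; hypothetical upper bound for deliberate movement
SETTLE_FRAMES = 5     # hypothetical number of clean frames before interactions resume


class GlitchFilter:
    """Flags frames where the tracked hand jumps implausibly far."""

    def __init__(self):
        self.last_pos = None
        self.clean_frames = SETTLE_FRAMES  # start enabled

    def update(self, pos, dt, high_confidence=True):
        """Return True if physics and interactions should be enabled this frame."""
        glitch = not high_confidence
        if self.last_pos is not None and dt > 0:
            speed = math.dist(pos, self.last_pos) / dt
            glitch = glitch or speed > MAX_HAND_SPEED
        self.last_pos = pos
        # Any glitch restarts the count of consecutive clean frames.
        self.clean_frames = 0 if glitch else self.clean_frames + 1
        return self.clean_frames >= SETTLE_FRAMES
```

Waiting for a run of clean frames, rather than re-enabling on the first good one, stops a hand that flickers at the edge of the tracked area from repeatedly grabbing and dropping objects.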

Rather than relying solely on the Oculus API, developers may need to extend the default logic that controls finger positions (as I did with the pinch processing) to meet their own needs and challenges.

Users will fidget when they aren't interacting, so be aware of unintended pinch detection. To grab a jigsaw piece, I require users to pinch and hold, which helps reduce accidental selections.
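Pinch-and-hold can be sketched in Python as a timer on top of the raw per-frame pinch flag: the grab only starts once the pinch has been held continuously for a minimum time, and any release resets the timer. The 0.25 s hold time and the class name are hypothetical.

```python
HOLD_TIME = 0.25  # seconds; hypothetical, long enough to filter brief fidgets


class PinchHold:
    """Turns a raw per-frame pinch flag into a grab that starts only after a hold."""

    def __init__(self, hold_time=HOLD_TIME):
        self.hold_time = hold_time
        self.held_for = 0.0
        self.grabbing = False

    def update(self, pinched, dt):
        """Advance by dt seconds and return True while the grab is active."""
        if not pinched:
            # Any release resets the timer, so short accidental pinches never grab.
            self.held_for = 0.0
            self.grabbing = False
        else:
            self.held_for += dt
            if self.held_for >= self.hold_time:
                self.grabbing = True
        return self.grabbing
```

The trade-off is a small delay before every deliberate grab, so the hold time should be the shortest value that still filters out fidgeting in playtests.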

Test where users are looking and only enable interactions when the target is in their eyeline.
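One way to implement the eyeline test is a cone around the gaze direction: a target only accepts interaction when the angle between the gaze and the direction to the target is within a limit. This Python sketch assumes the eye position and a gaze direction (for example, the headset's forward vector) are available; the 25-degree half-angle and the function name are hypothetical.

```python
import math

EYELINE_HALF_ANGLE_DEG = 25.0  # hypothetical cone half-angle around the gaze


def in_eyeline(eye_pos, gaze_dir, target_pos, half_angle_deg=EYELINE_HALF_ANGLE_DEG):
    """True if target_pos lies within a cone around the player's gaze direction."""
    to_target = [t - e for t, e in zip(target_pos, eye_pos)]
    dist = math.hypot(*to_target)
    gaze_len = math.hypot(*gaze_dir)
    if dist == 0.0 or gaze_len == 0.0:
        return False  # degenerate input: nothing sensible to test
    cos_angle = sum(a * b for a, b in zip(to_target, gaze_dir)) / (dist * gaze_len)
    return cos_angle >= math.cos(math.radians(half_angle_deg))
```

A pinch detected while the target is outside the cone can then simply be ignored.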

Try to place interactions closer to eye level than waist level. Users then have to raise their hands deliberately to interact, which reduces accidental interactions.
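A simple complement to placing interactions near eye level is to ignore pinches from hands held low. This Python sketch compares the hand's height with the eye's; the 0.45 m limit and the function name are hypothetical. The `up_axis` parameter exists because engines differ: Unreal Engine is Z-up and measures in centimetres, so there you would pass `up_axis=2` and a limit in centimetres.

```python
MAX_DROP_BELOW_EYE = 0.45  # metres; hypothetical limit, tune by playtesting


def hand_raised(hand_pos, eye_pos, max_drop=MAX_DROP_BELOW_EYE, up_axis=1):
    """True when the hand is no more than max_drop below eye height.

    up_axis selects the vertical component: 1 for Y-up, 2 for Z-up (as in Unreal).
    """
    return eye_pos[up_axis] - hand_pos[up_axis] <= max_drop
```

Hands resting at the waist then never trigger anything, however much the fingers fidget.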

I'll keep adding to this list as more come to mind.

Credits

Jigsaw 360 uses Unreal Engine, which at the time didn't include proper support for hand tracking. Thanks to Ryan Sheffer for his plugin, which made hand tracking much easier to integrate.

Quest Hand Tracking Plugin available here

Closing thoughts

Just like the best VR games are built from the ground up for VR, the best hand-tracked games will be those built specifically for hand tracking. They will build the limitations of the tech into the gameplay and narrative and ensure all interactions avoid the potential frustration points.

I'm sure awesome hand-tracked games are possible, and I hope hand tracking inspires new gameplay ideas.